This collaboration between Interactive Media Arts (IMA) and Franka Robotics investigates the creative potential at the intersection of art, technology, and robotics. Over the course of the Spring 2025 semester, Andy Garcia, Assistant Arts Professor at IMA, together with research assistants Taojie Zhang and Evan Xiao, engaged in a hands-on exploration of robotic expression using the Franka Research 3 arm.

With no prior experience in robotic control systems, we began by learning the fundamentals of robotic manipulation through the Desk interface provided by Franka Robotics. Gradually, we developed a deeper understanding of the arm’s capabilities and constraints. Once we were comfortable with this method of programming, we transitioned to the franky-control Python library. It gave us full programmatic control of the robotic arm and let us integrate other tools, such as TensorFlow.js for computer vision and the Soft Actor-Critic (SAC) reinforcement learning algorithm. This learning process was not only technical but also conceptual, prompting us to reflect on questions of agency and embodiment within artistic practice.

Three distinct art projects emerged from this research, each building on the last in terms of complexity and conceptual ambition:

Project 1: Contemplation

The first project is a pre-programmed sequence in which the robotic arm rakes fine sand, evoking the meditative aesthetics of a traditional Japanese Zen garden. The robot repeatedly drags lines through the sand and rearranges stones, then undoes everything and starts over in an infinite loop of precise motion. There is no deeper meaning, just contemplation: a simple, mesmerizing, endless act of a machine raking sand.
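A pre-programmed raking pattern like this one can be described as a list of waypoints. The sketch below is a hypothetical illustration, not the code used in the installation: it generates back-and-forth (boustrophedon) strokes over a rectangular sandbox, and the function name `rake_path`, the sandbox dimensions, and the stroke spacing are all assumptions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def rake_path(width: float, depth: float, spacing: float) -> List[Point]:
    """Hypothetical back-and-forth rake strokes over a width x depth sandbox.

    Returns (x, y) waypoints in metres, relative to one corner of the box.
    Strokes run along x and are `spacing` apart along y.
    """
    if width <= 0 or depth < 0 or spacing <= 0:
        raise ValueError("width and spacing must be positive, depth non-negative")
    # Small epsilon guards against float error such as depth / spacing == 1.9999999.
    n_strokes = math.floor(depth / spacing + 1e-9) + 1
    waypoints: List[Point] = []
    for i in range(n_strokes):
        y = i * spacing
        # Alternate direction so the rake stays in the sand between strokes.
        start, end = (0.0, width) if i % 2 == 0 else (width, 0.0)
        waypoints.append((start, y))
        waypoints.append((end, y))
    return waypoints


# Example: a 40 cm x 10 cm patch raked in lines 5 cm apart.
print(rake_path(0.4, 0.1, 0.05))
```

In an installation loop, a pattern like this would be followed by a smoothing pass and repeated indefinitely; the step that sends each waypoint to the arm is deliberately left out, since it depends on the control library and setup.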

Project 2: The Mimic Brush

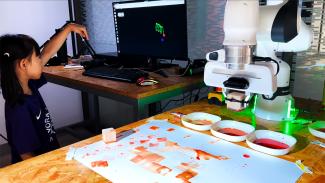

For the second project, we introduced computer vision, turning the robotic arm into an interactive painting tool. Using hand pose detection from TensorFlow.js with a camera, we track visitors’ hand movements in real time, allowing them to guide the robot’s brush through three-dimensional space. Each gesture is translated into a movement of the arm (forward, back, up, or down) as it carries a watercolor brush across the canvas. The system responds with precision, but also with a slight delay that forces participants to slow down and adapt to the robot’s timing.
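One way to picture the gesture-to-brush mapping is as a clamp-and-rescale from image coordinates into a safe box in front of the robot. The sketch below is a hypothetical illustration under several assumptions: the hand is reduced to a wrist position `(u, v)` normalized to 0..1 in the camera image, apparent hand size `span` stands in for depth, and the `Workspace` bounds are made-up values. The exponential smoothing in `Smoother` is one simple way to get steady, slightly lagging motion; it is not necessarily the source of the delay visitors experienced.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Workspace:
    # Hypothetical safe box for the brush tip, in metres (robot base frame).
    x_range: Tuple[float, float] = (0.30, 0.60)   # forward / back
    y_range: Tuple[float, float] = (-0.20, 0.20)  # left / right
    z_range: Tuple[float, float] = (0.10, 0.30)   # up / down


def lerp(lo: float, hi: float, t: float) -> float:
    t = min(max(t, 0.0), 1.0)  # clamp so the target never leaves the box
    return lo * (1.0 - t) + hi * t


def hand_to_target(u: float, v: float, span: float, ws: Workspace) -> Vec3:
    """Map normalized hand features (assumed 0..1) to a brush target.

    u, v: wrist position in the image (left->right, top->bottom).
    span: apparent hand size in the image, used here as a depth proxy.
    """
    x = lerp(*ws.x_range, span)     # bigger hand (closer to camera) -> further forward
    y = lerp(*ws.y_range, u)        # sign depends on camera and robot placement
    z = lerp(*ws.z_range, 1.0 - v)  # image y grows downward, so invert for height
    return (x, y, z)


class Smoother:
    """Exponential moving average: each update moves `alpha` of the way
    toward the new target. Smaller alpha = smoother but laggier motion."""

    def __init__(self, alpha: float = 0.2) -> None:
        self.alpha = alpha
        self.state: Optional[Vec3] = None

    def update(self, target: Vec3) -> Vec3:
        if self.state is None:
            self.state = target
        else:
            a = self.alpha
            sx, sy, sz = self.state
            tx, ty, tz = target
            self.state = (sx + a * (tx - sx), sy + a * (ty - sy), sz + a * (tz - sz))
        return self.state


smoother = Smoother(alpha=0.2)
print(smoother.update(hand_to_target(0.5, 0.5, 0.5, Workspace())))
```

Clamping in `lerp` keeps the brush inside the box even when a hand leaves the camera frame or the detector returns an outlier.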

During the IMA show, the installation became a lively and welcoming point of interaction. Visitors of all ages, including young children, stepped up to engage with the robotic painter. The resulting brushstrokes were abstract and playful. Rather than striving for technical precision, the piece emphasized co-creation, accessibility, and embodied control.

Project 3: Signals of the Void

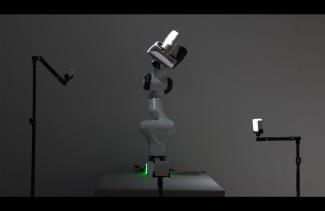

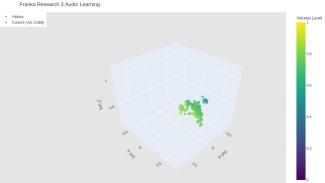

In the final project, we explore the emergence of behavior and movement through machine learning algorithms and reward-based systems. Three speakers are placed around the robotic arm and take turns emitting sound as a spatial signal. Every 30 minutes, the sound moves to a different speaker, prompting the robot to begin a new phase of exploratory movement.
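The speaker rotation can be expressed as a small scheduling function. This is a minimal sketch assuming a simple round-robin order starting with the first speaker; the names `active_speaker` and `phase_changed` are hypothetical, and only the three speakers and the 30-minute period come from the description above.

```python
N_SPEAKERS = 3
PHASE_SECONDS = 30 * 60  # the sound moves to the next speaker every 30 minutes


def active_speaker(elapsed_seconds: float, n_speakers: int = N_SPEAKERS,
                   phase_seconds: float = PHASE_SECONDS) -> int:
    """0-based index of the speaker playing after `elapsed_seconds`,
    assuming a round-robin order."""
    if elapsed_seconds < 0:
        raise ValueError("elapsed_seconds must be non-negative")
    return int(elapsed_seconds // phase_seconds) % n_speakers


def phase_changed(prev_elapsed: float, elapsed: float,
                  phase_seconds: float = PHASE_SECONDS) -> bool:
    """True when a 30-minute boundary was crossed, i.e. a new exploration phase starts."""
    return int(prev_elapsed // phase_seconds) != int(elapsed // phase_seconds)


print(active_speaker(45 * 60))  # 45 minutes in: second phase, speaker index 1
```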

The robot’s contortions are not pre-programmed or constrained to fixed paths. Instead, its motion emerges in response to the shifting sound source, resulting in a slow, ambient navigation of space. As it moves, the robot gradually constructs a spatial map based on perceived loudness.
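A loudness map of this kind can be kept as a sparse grid of cells around the arm. The sketch below is a hypothetical illustration: it assumes the gripper's 3D position is available at each step (for example from the arm's reported end-effector pose), and the class name `LoudnessMap` and the 5 cm cell size are assumptions. Each cell stores the running mean of the loudness readings taken inside it, so the loudest region can be looked up at any time.

```python
import math
from typing import Dict, Optional, Sequence, Tuple

Cell = Tuple[int, int, int]


class LoudnessMap:
    """Hypothetical sparse voxel map of perceived loudness around the arm.

    Each cell is `cell_size` metres on a side and stores the running mean
    of all loudness readings (dB) taken while the gripper was inside it.
    """

    def __init__(self, cell_size: float = 0.05) -> None:
        if cell_size <= 0:
            raise ValueError("cell_size must be positive")
        self.cell_size = cell_size
        self._sum: Dict[Cell, float] = {}
        self._count: Dict[Cell, int] = {}

    def cell_of(self, pos: Sequence[float]) -> Cell:
        # Quantize a 3D position (metres) to integer grid indices.
        x, y, z = (math.floor(c / self.cell_size) for c in pos)
        return (x, y, z)

    def add(self, pos: Sequence[float], loudness_db: float) -> None:
        cell = self.cell_of(pos)
        self._sum[cell] = self._sum.get(cell, 0.0) + loudness_db
        self._count[cell] = self._count.get(cell, 0) + 1

    def mean(self, cell: Cell) -> Optional[float]:
        n = self._count.get(cell, 0)
        return self._sum[cell] / n if n else None

    def loudest(self) -> Optional[Tuple[Cell, float]]:
        # Cell with the highest mean loudness, or None if nothing was recorded yet.
        if not self._count:
            return None
        best = max(self._count, key=lambda c: self._sum[c] / self._count[c])
        return best, self._sum[best] / self._count[best]


m = LoudnessMap()
m.add((0.12, 0.01, 0.31), -20.0)
m.add((0.42, 0.01, 0.21), -10.0)
print(m.loudest())
```

Averaging per cell smooths out momentary noise in the room, and clearing the map when the sound moves to a new speaker would let each phase start fresh.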

Using principles of reinforcement learning, we guide the robot’s behavior through a simple reward system. At each step, the robot slightly adjusts the positions of its seven joints, then analyzes the sound picked up by the microphone embedded in its gripper. If the sound at the new position is louder than at the previous one, the robot receives a reward; if it is quieter, it does not. Over time, this incentivizes the robot to seek out and “pinpoint” the location of the sound source. Additional rewards are given for discovering new joint configurations that lead to louder signals, encouraging a balance between focused searching and exploratory movement.
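The reward rule described above can be sketched in a few lines. This is a hypothetical illustration of the reward signal only. It is not the Soft Actor-Critic (SAC) learner mentioned earlier, which would be the algorithm trying to maximize such a reward. Several details are assumptions: loudness is measured as the RMS level of a block of microphone samples in dBFS, joint angles are rounded to a 0.1 rad grid so that "new configuration" is well defined for continuous angles, and the step size and 0.5 novelty bonus are arbitrary illustrative values.

```python
import math
import random
from typing import List, Sequence, Set, Tuple

N_JOINTS = 7  # the arm has seven joints


def loudness_db(samples: Sequence[float]) -> float:
    """RMS level of a block of samples (floats in [-1, 1]) in dBFS."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def perturb(q: Sequence[float], step: float, rng: random.Random) -> List[float]:
    """Nudge every joint angle (radians) by a small random amount.
    A real controller must also clamp to the arm's joint limits (omitted here)."""
    if len(q) != N_JOINTS:
        raise ValueError(f"expected {N_JOINTS} joint angles")
    return [qi + rng.uniform(-step, step) for qi in q]


def config_key(q: Sequence[float], resolution: float = 0.1) -> Tuple[int, ...]:
    # Discretize joint angles so nearby configurations count as "the same".
    return tuple(round(qi / resolution) for qi in q)


def reward(prev_db: float, new_db: float, q_new: Sequence[float],
           visited: Set[Tuple[int, ...]], novelty_bonus: float = 0.5) -> float:
    """1.0 if the new pose is louder than the last one, else 0.0, plus a
    bonus the first time a louder configuration is reached."""
    louder = new_db > prev_db
    r = 1.0 if louder else 0.0
    key = config_key(q_new)
    if louder and key not in visited:
        r += novelty_bonus
    visited.add(key)
    return r


rng = random.Random(0)
visited: Set[Tuple[int, ...]] = set()
q = perturb([0.0] * N_JOINTS, step=0.05, rng=rng)
print(reward(-30.0, -24.0, q, visited))  # louder and new: 1.5
```

The novelty bonus is what pushes the search outward: without it, the arm would be rewarded equally for revisiting a pose it already knows is loud.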

This robotic dance is not about productivity or mimicry, but about a slow, continuous search. The robotic arm becomes a listening body, stretching outward into the unknown, attentive to faint signals, and learning quietly from its surroundings.

Together, these projects serve as prototypes for rethinking how robotic systems can participate in creative processes, not just as tools, but as co-performers or agents. Each experiment reflects a different dimension of this potential: from programmed repetition to interactive responsiveness, and finally, to emergent behavior shaped by sensory feedback and machine learning.

This initiative embodies our commitment to interdisciplinary experimentation, blending art, technology, and research. It also affirms the vital role that artistic inquiry can play in shaping the future of human-machine interaction.

Thanks to Franka Robotics for their collaboration and support, which made these projects possible and opened new spaces for artistic imagination within the field of robotics.